Some features are missing: options to manage multiple backup jobs from the graphical user interface, compression settings, and options to control network transfers.

Closing Words

Duplicacy is a basic file-level backup program that ships with interesting under-the-hood options. Some of these options let you back up to a different storage location, use hash comparison of files instead of size and timestamp comparison, or assign a tag to a backup for identification purposes.

A guide on GitHub lists the commands and the options they ship with.

As far as I can tell from reading the forums, my immutability period is quite long.
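To make the difference between the two comparison modes mentioned above concrete, here is a minimal sketch in Python. It is not Duplicacy's code: the function names, the record layout and the choice of SHA-256 are assumptions made for illustration only.

```python
import hashlib
import os


def metadata_signature(path):
    """Cheap comparison key: file size and modification time."""
    st = os.stat(path)
    return (st.st_size, st.st_mtime_ns)


def content_hash(path, block_size=1 << 20):
    """Expensive comparison key: SHA-256 over the full file contents."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def changed(path, previous, use_hash=False):
    """Return True if `path` differs from the `previous` record.

    `previous` is a hypothetical dict with the keys "signature" and "hash",
    as recorded during an earlier backup run.
    """
    if use_hash:
        return content_hash(path) != previous["hash"]
    return metadata_signature(path) != previous["signature"]
```

The metadata check only needs a `stat` call per file, so it is fast, but it misses a file whose contents changed while its size and timestamp stayed the same. The hash check reads every file in full and catches that case.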

Backup Job: retention policy of 50 days (no GFS; I might enable this later). Backup Copy Job: copies from the SOBR to rotated USB drives, which are replaced daily.

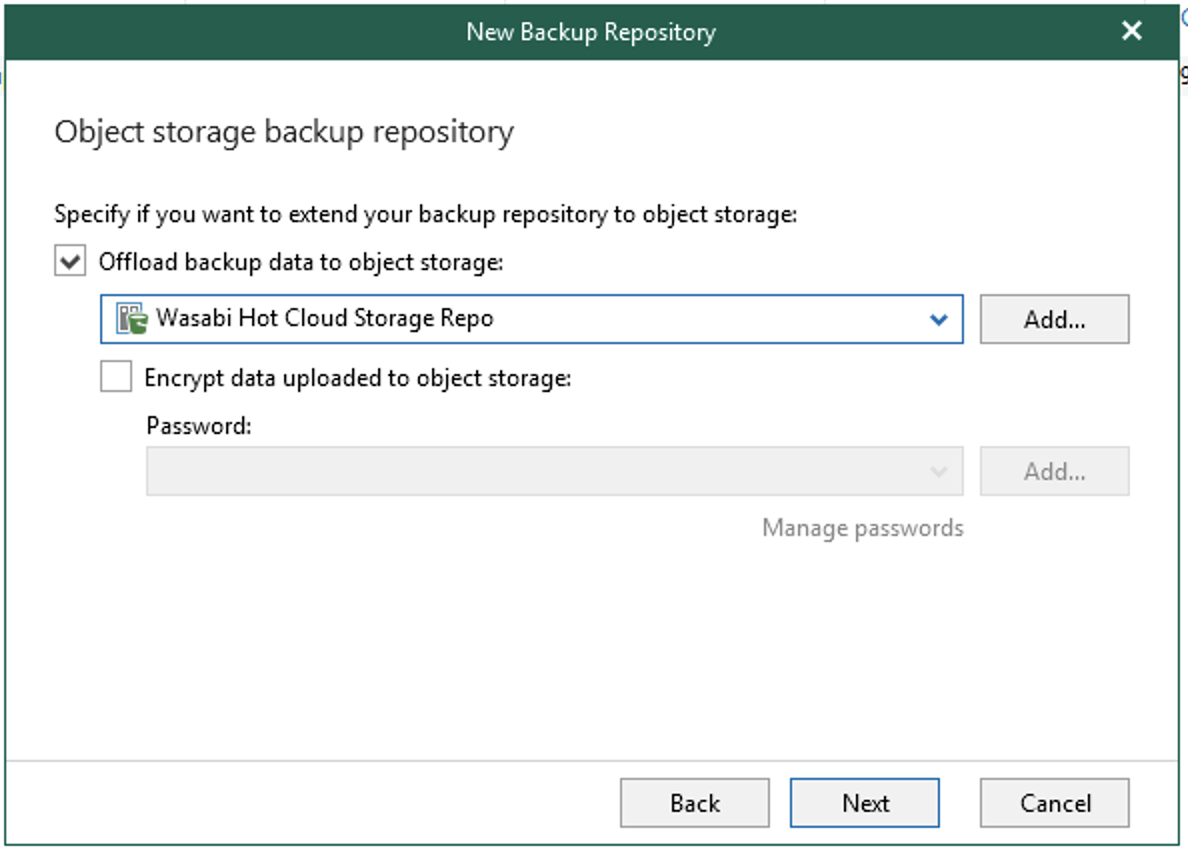

Restore is built right into the GUI and is also supported on the command line. Experienced users may use commands for better control and for additional features that the GUI version does not offer. The program uses incremental backups to keep the storage requirements for backup jobs as low as possible, and it supports deduplication to further that goal. Backups may be encrypted with a password. This encrypts not only file contents but also file paths, sizes and other information.

For the Capacity Tier (Wasabi): make recent backups immutable for 90 days.

To the question above: Wasabi will charge for 39 TB of deleted storage and 1 TB of active storage in your example. Wasabi's standard object policy is 90 days. Due to Wasabi's strategic relationship with Veeam, we have reduced that to 30 days. Please contact Wasabi support to request this change. Thanks.

I guess if you have one of his commits you won't need to copy the snapshots over from Wasabi to local.

I actually did have several chunks missing. I downloaded them and put them in the proper directories, and everything seems to check out now. I briefly looked at that commit, and it does seem like it would have helped.

I didn't want to wait for this, so I wiped out my new local storage, created a new one, and copied the config file from the Wasabi storage into it. Then I ran a new backup into the new local storage. Because the directory I am backing up didn't change, and the chunk parameters and encryption keys are the same, this created all the same chunks that are on Wasabi, but it took only about 6.5 hours instead of a week. (In reality what I did was slightly more complicated, but the outcome is the same as if I had created a new local storage and populated it with the chunks from Wasabi, in the required unflattened form.)

Now I'm running duplicacy copy from Wasabi to local, and I expected it to fly because all the chunks it needs to create are already in the local storage. I looked at the network monitor and it's using all of the bandwidth Wasabi will let me have, ~8 Mbps. So it must be downloading the chunks and THEN realizing that they don't need to be copied because they're already present in the destination. I realize there's no reason for you to have optimized for this case, but it sure would be nice if it did things in the reverse order, checking for the presence of a chunk before downloading it.

In addition to the config file, I also needed to copy the snapshots from Wasabi to my local storage. Now when I run the copy command it finishes in seconds. And I'm running duplicacy check on the local storage just in case there are chunks missing.

Great! I think the copy command is another feature that differentiates Duplicacy from other backup tools (besides cross-client deduplication). A few improvements were recently made to the copy command.
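The ordering asked for in this thread (check the destination first, download only what is missing) can be sketched as follows. This is an illustration, not Duplicacy's implementation: `copy_chunks` is a hypothetical name, and both storages are modelled as plain dict-like mappings from chunk ID to chunk bytes.

```python
def copy_chunks(chunk_ids, source, destination):
    """Copy chunks from `source` to `destination`, skipping existing ones.

    `source` and `destination` are hypothetical dict-like storages keyed by
    chunk ID. Returns a (downloaded, skipped) pair of counts.
    """
    downloaded = 0
    skipped = 0
    for chunk_id in chunk_ids:
        # Cheap existence check on the destination comes first ...
        if chunk_id in destination:
            skipped += 1
            continue
        # ... so the expensive read from the source only happens when needed.
        destination[chunk_id] = source[chunk_id]
        downloaded += 1
    return downloaded, skipped


# Usage: two of the three chunks are already present locally.
remote = {"c1": b"alpha", "c2": b"beta", "c3": b"gamma"}
local = {"c1": b"alpha", "c2": b"beta"}
print(copy_chunks(["c1", "c2", "c3"], remote, local))  # prints (1, 2)
```

With a remote storage that throttles downloads, the existence check costs one small request per chunk, while the skipped download saves the full chunk transfer.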


Duplicacy copy seems much slower than it should be.

Now I want to create a local backup with the same contents. I used duplicacy add and duplicacy copy to start copying the Wasabi snapshots into a new local storage. Wasabi must throttle downloads, though, because I'm not getting even 8 Mbps down, whereas I was saturating my 15 Mbps upload when I did the initial backup.

A short recap for myself on how to set up the Duplicacy CLI on Linux to back up to Wasabi: get the duplicacy and duplicacy-util binaries from below.
